Unwisely I got embroiled in an online discussion this morning on the merits of research versus the experience of seeing stuff work with our own eyes. The contention is that although research may have its uses, there is no need to waste time or money researching the “blindingly obvious”.

On the face of it, this would appear to be self-evidently true. Why bother testing the efficacy of something we can ‘see’ working? Well, as I’ve pointed out before, we are all victims of powerful cognitive biases which prevent us from acknowledging that we might be wrong. Here’s a reminder of some of these biases:

- Confirmation bias – the fact that we seek out only that which confirms what we already believe

- The Illusion of Asymmetric Insight – the belief that though our perceptions of others are accurate and insightful, their perceptions of us are shallow and illogical. This asymmetry becomes more stark when we are part of a group. We progressives see clearly the flaws in traditionalist arguments, but they, poor saps, don’t understand the sophistication of our arguments.

- The Backfire Effect – the fact that when confronted with evidence contrary to our beliefs we will rationalise our mistakes even more strongly

- Sunk Cost Fallacy – the irrational response to having wasted time, effort or money: I’ve committed this much, so I must continue or it will have been a waste. I spent all this time training my pupils to work in groups so they’re damn well going to work in groups, and damn the evidence!

- The Anchoring Effect – the fact that we are incredibly suggestible and base our decisions and beliefs on what we have been told, whether or not it makes sense. Retailers are expert at gulling us, and so are certain education consultants.

In addition to all of these psychological ‘blind spots’ we are also possessed of physiological blind spots. There are things which we, quite literally, cannot see. Due to a peculiarity of how our eyes are wired, there are no cells to detect light on the optic disc – this means that there is an area of our vision which is not perceived. This is called a scotoma. But how can that be? Surely if there was a bloody great patch of nothingness in our field of vision, we’d notice, right? Wrong. Cleverly, our brain infers what’s in the blind spot based on surrounding detail and information from our other eye, and fills in the blank. So when we look at a scene, whether it’s a static landscape or a hectic rush of traffic, our brain cuts details from the surrounding images and pastes in what it thinks should be there. For the most part our brains get it right, but then occasionally they paste in a bit of empty motorway when what’s actually there is a motorbike!

Maybe you’re unconvinced? Fortunately there’s a very simple blind spot test:

R L

Close your right eye and focus your left eye on the letter L. Shift your head so you’re about 24cm away from the page and move your head either towards or away from the page until you notice the R disappear. (If you’re struggling, try closing your left eye instead.)

So, how can we trust when our perception is accurate and when it’s not?

Worryingly, we can’t.

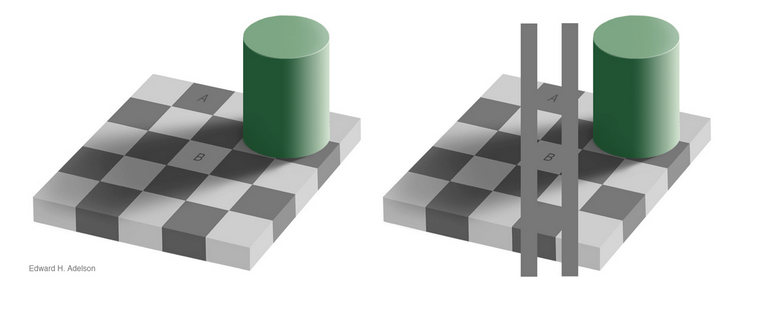

On top of this we also fall prey to compelling optical illusions. Take a look at this picture:

Contrary to the evidence of our eyes, the squares labelled A and B are exactly the same shade of grey. That’s insane, right? Obviously they’re a different shade. We know because we can clearly see they’re a different shade. Anyone claiming otherwise is an idiot.

Well, no. As this second illustration shows, the squares really are the same shade.

How can this be so? Our brains know A is dark and B is light, so we edit out the effects of the shadow cast by the green cylinder and compensate for the limitations of our eyes. We literally see something that isn’t there. This is a common phenomenon and Kathryn Schulz describes illusions as “a gateway drug to humility” because they teach us what it is like to confront the fact that we are wrong in a non-threatening way.

Now clearly there are times when we absolutely should accept the evidence of our own eyes over what we’re told. Despite her advocacy for research, @Meetasengupta suggested a great example: “If I see a child being slapped, but research says kids are safe in nurseries, I’ll believe my eyes.” But we would be foolish indeed to draw any conclusion about all nurseries or all kids based on the evidence of our own eyes. And as @nastyoldmrpike points out, for the most part anecdotal evidence is an oxymoron.

So should we place our trust in research or can we trust our own experiences? Well, maybe. Sometimes if it walks like a duck and sounds like a duck, it’s a duck. But we’re often so eager to accept that we’re right while others must be wrong that it’s essential for anyone interested in what’s true rather than what they prefer to take the view that the more complicated the situation, the more likely we are to have missed something.

Sometimes when it looks like a duck it’s actually a rabbit.

It is however entirely reasonable to ask for stronger evidence when findings conflict with common sense and our direct observations. The burden of proof should always be with those making claims rather than with those expressing quite proper scepticism.

Kathryn Schulz says that our obsession with being right is “a problem for each of us as individuals, in our personal and professional lives, and… a problem for all of us collectively as a culture.” Firstly, the “internal sense of rightness that we all experience so often is not a reliable guide to what is actually going on in the external world. And when we act like it is, and we stop entertaining the possibility that we could be wrong, well that’s when we end up doing things like dumping 200 million gallons of oil into the Gulf of Mexico, or torpedoing the global economy.” Secondly, the “attachment to our own rightness keeps us from preventing mistakes when we absolutely need to, and causes us to treat each other terribly.” But an exploration of how and why we get things wrong “is the single greatest moral, intellectual and creative leap [we] can make.”

Here she is talking about being wrong:

So difficult to give up the notion of common sense or faith in a simplistic world but research beats observation!

This is still rankling after 24 hours. In a good way. Observation is where we start; and by observation all sensory inputs must be included, not just the visual implied. However the world is more complex than we realise. Humans think best in linear rather than exponential terms and the world is often exponential (after Kahneman, Taleb and others). The other video to include here for those who haven’t seen it already is the Simons and Chabris ‘inattentional blindness’ test. Thanks for this post David. Lots of meal time discussion and thoughtfulness 🙂

The Invisible Gorilla vid is in the post I’ve linked to. Thanks

Great post and one which should strike a chord with anyone who has tried to change anything.

It is said “There is nothing common about common sense” and I would add “When you do find it it is not by the bucket full but by the thimble full”. Now where did I put that duck?

It was really a great post, keep up the good work 🙂

I agree that when someone cites common sense what they actually mean is “what I think”. However, I don’t think we should dismiss our experience, or simply give ‘research’ the kind of primacy that will lead us to become people who simply implement ‘what the research tells us’.

Firstly the research can’t tell us ‘what works’, only what has worked in some contexts. Secondly who decides what qualifies as research? Do we use the Goldacre gold standard, or are we also interested in qualitative methods of collection and analysis? Can we generate evidence in our own classrooms?

You’re right, of course, we need to be sure only of one thing – that even our most deeply held opinions should be open to examination, but sometimes it will be the evidence of our own eyes that helps us to do it.

I completely agree and am certainly not advocating blind adherence to research findings. All I’m suggesting is that when we most think we’re right, we’re most in danger of being spectacularly wrong.

yup – agree 🙂

Hmmm. Sounds like Sod’s Law. Any research to show its validity?

As David says, research can never tell teachers what to do, but it can sometimes distinguish between what’s obvious and true, and that which is obvious and wrong. As Josh Billings once said, “It ain’t ignorance causes so much trouble; it’s folks knowing so much that ain’t so.”

Cognitive bias etc is just the term researchers of a certain orientation use to explain away subjectivity. We are all constructing the world subjectively. That is also true of researchers. Even the explanation of a causal event can be subjective, even if that which they are describing, is not.

It’s not that there is an objective world out there we are getting wrong but rather we are constructing that world as we interact with it. Whether you believe there is a relationship (or correspondence) between that construction and reality is an ontological issue; realists say yes whilst relativists say no.

Educational Research really should be the point at which we all collate data and collaborate. Often it isn’t because educational research has become (always has been) a “thing in itself”. In other words, often, research fields themselves become a self-fulfilling prophecy. Learning styles are a case in point and there are others related to computing; I can think of adaptive educational technologies as an example. In other words they become a discourse that has become disengaged from that which they are researching. Sometimes, no doubt, that is necessary where the research is purely speculative or theoretical.

The problem for practitioners, in my view, is that educational research is not sympathetic to practice although researchers would, no doubt, claim that it is. The field of research, like any human endeavour, has issues which practitioners need to start addressing (albeit I think a number are, including yourself).

The issue, it seems to me, is not which is best, anecdotal evidence or research evidence, because one is not necessarily apart from the other, but that both are equally useful. One feeds the other and vice versa. Qualitative techniques are well versed in acknowledging subjectivity.

I think that the separation between the situated context and research is a false dichotomy, which persists because research needs to maintain its aura of expertise in order to justify its position.

The power of educational bloggers will inexorably impact upon that, a fact I guess Dylan Wiliam has long since accepted. The fact that learning styles theorists pretty much made up a field and vindicated it for a song says much about the field of research, and that of practice. Practitioners have to understand, and engage with, research to stop it happening again or at least use the tools in a more appropriate way.

The term cognitive bias is not that helpful; we simply subjectively see what we want to see. And no doubt it is as real, and meaningful, as any other thing. That is not to take a relativist position and suggest that there is no external reality, but rather that the term cognitive bias suggests that there is a way not to be cognitively biased, and that is not really possible.

Phew that was a bit of a waffle ho hum

I’m glad you’re not taking a relativist position as that would be a bit silly 🙂

That you find cognitive bias “not that helpful” a term is, I guess, your subjective reality. I find it very helpful to understand the many and various ways I’m likely to jump to assumptions that turn out to be seriously flawed. Sadly, as Emily Pronin points out, knowing this doesn’t solve the problem: file:///Users/didau/Downloads/climateponinlinrossbiasblindspot.pdf

“a fact I guess Dylan Wiliam has long since accepted.”

Reading that back it implies that DW at one time, didn’t accept it. I’m not suggesting that at all rather I presume from comments that he believes in a re-contextualising of the research – practice relationship.

[…] on perception, which is notoriously unreliable. David Didau has blogged about some perceptual flaws here. He also mentions some of the cognitive errors that occur when people draw conclusions from […]

[…] https://www.learningspy.co.uk/featured/can-trust-evidence-eyes/ […]

[…] We can know whether a thing is true by consulting the evidence of our senses. This might work for certain quotidian truths, but I’m not sure it works for anything much beyond this. Sometimes we cannot trust the evidence of our own eyes. […]

[…] of a low-validity domain is to accept how routinely and regularly we are wrong. This is why I like illusions so much: they are a gateway drug to humility. As the philosopher Simone Weil puts it, “the […]