The cost of bad data is the illusion of knowledge. – Stephen Hawking

Schools, as with almost every other organ of state, are increasingly obsessed with big data. There seem to be two main aims: prediction and control. If only we collect and analyse enough data then the secrets of the universe will be unlocked. No child will be left behind and all will have prizes.

Can we learn from the past?

No. Or at least, not in any way that helps. We can see trends, but these are far more likely to be noise than signal. When exam results are rising we take credit and when they fall we wring our hands but Ofqual say that, depending on subjects, GCSE results are likely to vary from anywhere between 9 – 19% annually! What can we helpfully learn from this sort of volatility?

In Antifragile, Nassim Nicholas Taleb says,

Assume… that for what you are observing, at a yearly frequency, the ratio of signal to noise is about one to one (half noise, half signal)—this means that about half the changes are real improvements or degradations, the other half come from randomness. This ratio is what you get from yearly observations. But if you look at the very same data on a daily basis, the composition would change to 95 percent noise, 5 percent signal. And if you observe data on an hourly basis, as people immersed in the news and market price variations do, the split becomes 99.5 percent noise to 0.5 percent signal. (p.126)

The more data you collect and the more you try to analyse it, the less you are likely to perceive. Looking at the past leads us into believing we can control the present.
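One way to see why looking more often yields proportionally more noise is a toy model. This is not Taleb's own calculation. It assumes a quantity that improves steadily by `drift` per year while random shocks add a standard deviation of `volatility` per year. Over a window of `dt_years`, the real change grows in proportion to the window, but the random change grows only with its square root, so the ratio of signal to noise shrinks as the window shortens. The function name and all the numbers are hypothetical, chosen only for illustration.

```python
import math

def signal_to_noise(drift, volatility, dt_years):
    """Ratio of expected real change to typical random change over dt_years.

    Assumes a random walk with constant drift: the signal grows like dt,
    the noise like sqrt(dt). An illustrative model only.
    """
    signal = drift * dt_years
    noise = volatility * math.sqrt(dt_years)
    return signal / noise

# Hypothetical values chosen so that yearly signal and noise are equal.
drift, volatility = 1.0, 1.0
windows = {"yearly": 1.0, "daily": 1 / 365, "hourly": 1 / (365 * 24)}
for name, dt in windows.items():
    ratio = signal_to_noise(drift, volatility, dt)
    print(f"{name:>7}: signal/noise = {ratio:.3f}")
```

Under these assumptions the ratio falls from 1 for yearly observations to about 0.05 for daily ones and about 0.01 for hourly ones. The exact figures depend entirely on the assumed model, but the direction does not: the more often you look, the more of what you see is noise.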

Can we control the present?

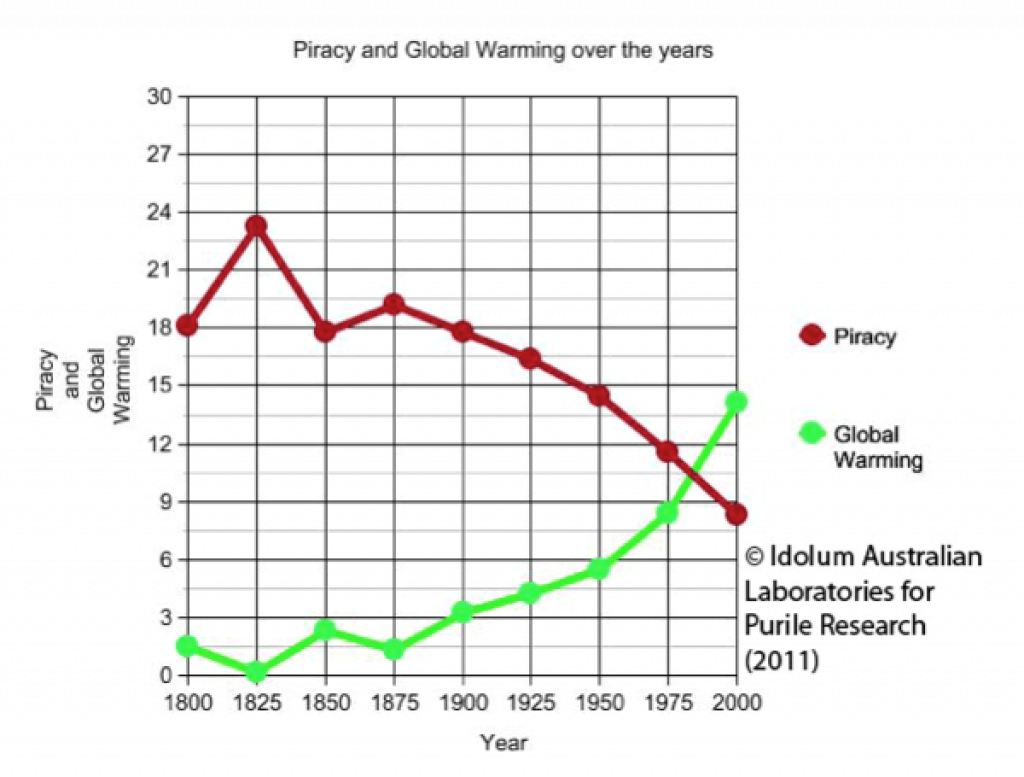

No. Or at least, not with any reliability. Stuff just happens. We think we can see causation, but all we ever see are correlations. Some correlations are implausible so we dismiss them:

(And, just for fun, have a look at these examples of our supposed ability to perceive causality from Belgian philosopher, Albert Michotte.)

Others seem logically connected so we pay them great heed. This leads us to see things that aren’t there, like for instance the ‘fact’ that sex is a significant factor in achievement.

We can check to see whether teachers and students are compliant, we can check to see whether they’re performing as we might want or expect, but we can’t read minds and we can’t measure learning. We can, of course, make inferences, but these are only really valuable if we’re clear on the very real distinction between learning and performance. This muddled thinking leads school leaders to say things like, “80% of the teaching in our school is good or better.” Is it? How do you know? What they really mean is that 80% of lessons meet with their approval. This is not the same thing. But it makes us believe we can predict the future.

Can we predict the future?

No. Or at least, not with any accuracy. What we can do is make a prediction and then have a stab at working out the probability that our guess is accurate given the data we have. But this isn’t very sexy so we fool ourselves into imagining that our data is precise and accurate, and then try to work out the probability that our predictions are true. We routinely say things like, “Jamie will get a B grade in maths,” and then attempt to work out the likelihood the prediction is accurate. What we should be doing is saying, “Jamie achieved a B grade in his last maths test,” and then trying to work out the likelihood this result was accurate.

Here’s how the problem looks when applied to the real world:

1. I watched Eastenders last night. What’s the probability I watched BBC1?

2. I watched BBC1 last night. What’s the probability I watched Eastenders?

See the problem? 1 is meaningless and 2 is strictly limited.
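The two questions differ in the way that P(A given B) differs from P(B given A). Here is a minimal sketch with made-up numbers. It assumes Eastenders is shown only on BBC1, so watching the programme guarantees the channel, while knowing the channel leaves the programme uncertain. The values of `p_bbc1` and `p_eastenders` are hypothetical.

```python
def conditional(p_joint, p_condition):
    """P(A | B) = P(A and B) / P(B)."""
    return p_joint / p_condition

# Hypothetical probabilities for one viewer on one evening.
p_bbc1 = 0.40          # watched anything at all on BBC1
p_eastenders = 0.10    # watched Eastenders (assumed to air only on BBC1)
p_both = p_eastenders  # Eastenders implies BBC1, so the joint equals P(Eastenders)

p_bbc1_given_eastenders = conditional(p_both, p_eastenders)
p_eastenders_given_bbc1 = conditional(p_both, p_bbc1)

print(f"P(BBC1 | Eastenders) = {p_bbc1_given_eastenders:.2f}")
print(f"P(Eastenders | BBC1) = {p_eastenders_given_bbc1:.2f}")
```

The first answer is certain whatever numbers you choose. The second depends entirely on how much else the viewer might have watched, which is exactly the information we usually lack.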

No amount of data can accurately describe the complexity of human systems. Maybe the data we collect at Christmas might help us to predict GCSE results with a reasonable amount of accuracy, but data collected 5 years previously is much less reliable as the margin of error only grows with time: inspection frameworks change; external assessments are changed mid-year; teachers leave, new teachers arrive; students experience spurts and declines. Reality gets in the way of our careful calculations.

Because we’re so poor at dealing with uncertainty, we struggle to accurately forecast the future. All prediction is based on probability; these probabilities are risks which we calculate by analysing historical data. Uncertainty, though, is different to risk. A risk describes the possible range of all known outcomes and tries to calculate the likelihood of each occurring. All too often, we don’t even know that an outcome is possible, let alone the probability of it occurring. Data management systems can only tell us what has happened in the past (and we’re not even very good at interpreting that!). If the future is different to the past – as it inevitably will be – any forecast we make will be wrong.

The conclusions we might draw from all this are:

- You don’t know what you don’t know so avoid believing you can explain complex events.

- The more data you collect the less meaningful it will be.

- You cannot predict the future, but you can calculate how good your data is.

1. You don’t know what you don’t know so avoid believing you can explain complex events.

2. The more data you collect the less meaningful it will be. Less is more.

3. You cannot predict the future but you can calculate how good your data is.

2. I agree. Only gather the data you can manage (it costs time, money and energy to do this). To collect less, know why you are collecting data and what you intend to do with it. Also make sure the quality of the data is good enough to use (verify the integrity of the systems and the people collecting your data, and validate the data itself).

1. In well organised organisations you can use data with context to explain complex events. In education it seems data is massaged and manipulated so that it’s worthless, and a lack of training and competency in assessment raises questions over the quality of the data being provided. If data is corrupted or questionable then of course you can’t use it to understand the past.

3. Forecasting. As you say, it’s all probability. But forecasting reliability improves if the analyst understands the variables and the data very well.

I really don’t believe you can explain complex events by analysing data – we just think we can. This is mainly due to the distorting effects of hindsight bias which makes us credulous about the power and control we are capable of exerting.

I think this might be missing something. Really big data (that is, lots and lots of data) can be more useful than a small amount of data. The issue is rather whether the data is fit for purpose. So, in the stock market example, the volatility (or noise) is high, but collecting data over a longer period of time means that the underlying trends become clearer (as the noise tends to cancel itself out: detecting the Higgs boson is a similar example of this). Sample size is most pertinent here, I think. So, in one school (or even more so, in one cohort in one school) the number of data points (students) is relatively small compared to the variation in the individuals (humans are complex, and so differences can be large). Hence, data collected on one cohort might be meaningless, whereas data collected on all the cohorts across the country might be more meaningful (but impossible to use at the level of the individual).
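The sample-size point can be sketched with a small simulation. Every simulated student below is drawn from the same distribution (mean 50, standard deviation 15, both hypothetical), so any difference between cohort averages is pure noise. The function name and parameters are assumptions made for illustration, not anything a school data system actually uses.

```python
import random
import statistics

def spread_of_cohort_means(cohort_size, n_cohorts=500, seed=0):
    """Standard deviation of the average score across simulated cohorts.

    All students come from one distribution, so the spread returned
    here measures noise alone, not any real difference between cohorts.
    """
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(50, 15) for _ in range(cohort_size))
        for _ in range(n_cohorts)
    ]
    return statistics.stdev(means)

for size in (25, 200, 1600):
    spread = spread_of_cohort_means(size)
    print(f"cohort of {size:>4}: averages vary by about {spread:.2f} points")
```

In theory the spread should be close to 15 divided by the square root of the cohort size. That gives roughly 3 points for a class of 25 but well under half a point for 1,600 students. A three-point swing in one class's average therefore tells you very little, while the same swing across a national cohort would be worth investigating.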

Yes, and herein lies a different issue, I would argue, on the use of datasets in education, and the underlying use of the positivistic approach, especially RCTs. The data sets you might gather, and on which you might perform appropriate statistical operations, will possibly give us trend data and probability samples. But given the complex and ever-changing nature of the sample (the pupils), this will be essentially meaningless at a smaller level, e.g. the pupil and probably the class and the school, which are the sample sizes that interest teachers, heads and schools.

Actually, I don’t think the statistical sampling of RCTs is meaningless. I think large-scale trials allow us to make meaningful and measurable predictions as to how a given cohort is likely to respond to an intervention.

Yes, but a given cohort is not the same as the class or the individual – it gives you probabilistic samples, not individualistic samples.

By cohort I mean class. We can generalise probabilistically.

OK – here’s a brief question that’s been bugging me: What if the majority of studies in Hattie’s meta-analyses were badly carried-out in the first place…? Has he said that he quality-controlled each study first to ensure that they were RCTs…?

No, there’s little or no quality control on the data sets in Visible Learning. Quantity, not quality.

Should that cause any pause for thought? Is the assumption that the number of false positives and false negatives would simply balance out?

I’ve written here about some of my concerns: https://www.learningspy.co.uk/featured/unit-education/

I think school managers often forget that collecting lots of data can help make predictions – but those predictions only apply to large samples, not a class set. You can’t necessarily link 80% progression last year for the cohort to an 80% expectation for one class or teacher. As you say, the noise overwhelms the signal.

If only we could do an experiment (and ‘risk the life chances of a cohort’ according to management speak). Let’s not set a year group any targets. Let’s not track their progress. Let’s spend all our time and energy teaching them. Obviously feedback, encouragement, etc, but not the time-wasting stuff: ‘well, what you need to do to get from a C to a B is blah blah’ and ‘you’re doing ok but maybe your target grade was too high/low’ etc [remember target grades are a fiction, often from FFT]. Tell them to keep on doing their best etc. Teachers have enough subject expertise to know whether or not a particular maths exercise is within reach of a student. Anyway my guess is that there would be very little difference in the results. And because teachers and students are less stressed, they may even be slightly better. The problem is that no-one is ever going to do this experiment. Any ideas?

Worth noting that there are very different practices in schools. For reasons quite complicated I currently work across 2 schools and the approaches to data are massively different:

School 1:

Staff are given, at the end of Year 9, the “chance tables” for each student taking their subject at KS4 and asked to use the “chance table” and all their other knowledge about the student to set a target.

During KS4 teachers submit forecast GCSE grades 4 times (2 in Y10 and 2 in Y11).

These grades are compared to the staff set target. If a student is “off the pace” across the board then that *might* indicate something going on with that student and that some additional support *might* be necessary. The Head of Year and the tutor team use this data as a piece of information to put into their mix and no more.

Heads of Subject get the information split up by class. If there is a class with lots of students “off the pace” it *might* indicate something going on in that class. The Head of Subject has a chat with that teacher to see that all is OK. Sometimes this approach flags up an issue that the teacher, for whatever reason, has not mentioned (eg. a combination of students that just isn’t working out) and a solution is found. Sometimes there is nothing untoward. Sometimes there is.

At the end of the GCSE courses a teacher’s accuracy of forecasting (from the final KS4 report) and the exam performance of the students compared to their targets are reviewed. Not in any ‘heavy handed’ way but informally.

If a teacher sees, year on year, irrespective of their class, that their forecasting is awry and/or that students are performing significantly differently from the targets (in either direction) then it *might* indicate that something unusual or different is going on (eg. really effective teaching approaches that could be shared).

One year of data tells us nothing. Two years next to nothing. You need several years of data to begin to even possibly see something significant. You do, occasionally, see something though so it is worth doing.

Staff are comfortable with the use of data in school – it is manageable and is widely seen to be effective.

School 2:

Staff are provided with targets by SLT. These are not explained and most staff have no idea of where they came from. It might as well be magic! (It happens to be the modal grade from FFT)

Teachers submit forecasts once per half term throughout KS4.

These grades are compared to the SLT set targets. If a student is “off the pace” the class teacher is expected (in writing) to explain how they intend for each individual to get back up to speed.

Heads of Subject are provided with reams and reams of data for their subject at each reporting point – % of students making 3 Levels of Progress KS2-4; 4 LOP; 5 LOP; gender analysis; progress of PP students; SEN progress; FSM progress; A*-A percentage; A*-C percentage; Average grade; …. – they must then account for anything that is lower than the targets set by SLT and produce a plan (in writing).

Heads of Year receive similar volumes of data and are expected to put things in place to address any students who are “off the pace”. There are a bewildering number of interventions that take place. All are logged/tracked such that everyone can see the intervention/support that any student has received at any time during KS4.

Staff are uncomfortable with the use of data in school. It is seen as getting in the way of teaching.

Both School 1 and School 2 are currently rated “Good” and both have value added that is >1000 (or Progress 8 that is > 0).

We must therefore be extremely wary of assuming that the data practices in one school are the same as (or very similar to) those in others. Obviously those staff who work in schools similar to School 1 tend not to be as vocal on Twitter, blogs and the like, so people can get a “false” sense of what is typical (although, from speaking to people around schools, approaches such as School 1’s are in the minority – but not a small minority).

A quick note about FFT. They don’t produce targets for individual students (despite what people say). They look at the achieved GCSE results for students nationally and say things as simple as “of students who gained a 5b in KS2 Maths and 4a in English – 1% achieved a G at GCSE Geography; 2% an F; 6% an E; 11% a D; 30% a C; 22% a B; 15% an A and 13% A*”.

For any given starting point there is quite a spread of grades so doing any “calculation” (whether mean or mode) hides the variability – but the data can be useful for a teacher if handled carefully. Too many schools do not.
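To make the point about variability concrete, here is a short sketch using only the percentages quoted above for that one starting point. The variable names are illustrative, and nothing here reflects how FFT itself computes or presents its estimates.

```python
# Percentages quoted above for one KS2 starting point (GCSE Geography).
chances = {"G": 1, "F": 2, "E": 6, "D": 11, "C": 30, "B": 22, "A": 15, "A*": 13}

grades = list(chances)  # insertion order runs from lowest to highest grade
modal_grade = max(chances, key=chances.get)
share_not_modal = 100 - chances[modal_grade]
share_b_or_better = sum(chances[g] for g in grades[grades.index("B"):])

print(f"modal grade: {modal_grade} ({chances[modal_grade]}% of students)")
print(f"students who did not get the modal grade: {share_not_modal}%")
print(f"students who got a B or better: {share_b_or_better}%")
```

On these figures the modal grade is a C, yet 70% of students with that starting point got something else, and half got a B or better. A single “target” drawn from this table discards most of what the table says.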

A reply to Steve (sorry, didn’t use the right reply button just now).

You make a good point that people in school 2 are more likely to blog etc. Fair point. I would however balance this by saying a lot of staff in school 2 are too knackered from data to be aware of excellent blogs such as DDs. In response to your point about ‘estimates’ from FFT please see this analogy (they are complicit in the problem) https://joiningthedebate.wordpress.com/2015/11/13/fft-data-and-drink-driving/

Also schools can choose what type of ‘estimates’ they want, ie my school wants to be in the top so many percentile (don’t want to identify my school exactly) so has obtained higher ‘targets’ accordingly. I must compliment Jack Marwood who has done a lot to try to understand Fischer.

http://icingonthecakeblog.weebly.com/

scroll down for the 3 articles (you may know these already as there is a Steve in the comments)

I myself have had a go at getting hold of some of the algorithms they use. I asked to be given as much maths and stats as they wanted (don’t hold back) rather than the vague statements that are given to non maths specialists. Their ace card is to say that they cannot release their algorithms because of Intellectual Property issues. Not a transparent organisation at all!

Thanks for your reply – and yes I am the Steve who commented on Jack’s blog.

To be honest I am torn with FFT – between the proportionate use and benefit I see in School 1 and the unnecessary and inappropriate use (with negative consequences for staff & students) in School 2.

So the question – how do we stop the situation in School 2 without preventing the good use in School 1?

Hello Steve (small world!)

going back to my original ‘experiment’…let’s scrap the use of FFT etc and just see what happens. I’m game.

PS

I have no problem with ‘small data’. That is – a class teacher should have some way of identifying effort and attainment, and be able to spot students at the top and bottom end.

My confirm follow has expired so I hope this will create a new one. This is definitely a post to follow!

not entirely related but not unrelated either – I have been listening to reports and inquiries and all sorts of gubbins as to how Pollsters got the results of the General Election wrong. Did they ask too many people? too few? the wrong kind? only one kind? not enough old people? only really left-of-centre people?

They must have been swimming in collected data, analysed it until the proverbial cows came home, drawn graphs, created pie charts, coloured in maps of the country, crunched some very big numbers, looked back at previous elections, etc etc. All to predict an unknowable future.

What I find amazing is not that they got it wrong, but people are surprised that they got it wrong. All these people confusing prediction and data with actual facts and reality. Silly sausages.

And I can’t help but think we are quite similar in schools. Ooo, how did he do on an end of unit test? oooo how did he do when I asked him about things? how did he do last term on a similar test. I know, from all that information, he’ll almost definitely come out of the exam with a Hedgehog.

What? He came out with a Badger? I am very surprised. I definitely knew it would be a Hedgehog. Why didn’t he get a Hedgehog? What happened? How did this unknowable, unpredictable, highly variable human being not do exactly what I thought he would?