I want you to conjure up the spirit of one of your primitive ancestors. Picture yourself hunting for food on the savannah or in a primordial forest. Imagine, if you will, that you catch a glimpse of movement out of the corner of your eye. Is it a snake?

Although you can’t be sure, the only sensible option is to act with certainty, assume that there is a snake and take immediate steps to avoid it. We’re primed to act with certainty on minimal information. This incredibly useful survival instinct has served the species well for countless millennia. If ever there was a tendency amongst some of our ancestors to thoroughly test their observations and have a good root around in the undergrowth to check if they really did see a snake, they did not survive to pass on their genetic material. We’re descended from those who didn’t check.

Acting decisively with minimal information may have served us well in the hostile environments of the past, but it is a less successful strategy in a relatively safe environment. A school is a relatively safe environment; teachers are unlikely to die as a result of their decision making, which means it’s much safer to really test our predictions and find out whether we’re correct to believe as we do. However, because we’ve evolved a preference for not checking, we continue to act as if we’re in a hostile environment.

The metaphorical hunting ground represents sources of evidence, and the snake represents the possibility that we might unearth evidence that contradicts our cherished beliefs. Although finding contradictory evidence won’t hurt us physically, it may still be a threat to our pride. This, we tell ourselves, is the correct way to run a school, to manage a class, to teach this subject, to interact with students. How do we know? Because we just do. Often we have a lot invested in our beliefs – time, credibility – and if we find evidence that we might be wrong, then we run the risk of looking foolish. So we’d rather not check.

The consequence of certainty is that we stop thinking. Why would we think about something if we already knew the answer? If we don’t stop to thoroughly test our beliefs then we’re sure to be wrong at least some of the time. The trouble is, no one likes a ditherer. Just look at the way we treat politicians when they’re asked a tricky question. We’re desperate for them to respond with quick answers and easy certainty. If they were to admit they weren’t sure, we’d be contemptuous. The same pressures are at work everywhere: if you’re in a position of responsibility, everyone will expect you to just know what to do and to make rapid decisions with no hint of doubt.

I’m under no illusions: our intolerance for uncertainty means that there will always be limited time for deliberation. But unless we accept we’re often wrong, we’re likely not only to make poor decisions but to hide the evidence of our mistakes from ourselves.

Have a look at the image below:

This is Roger Shepard’s Turning the Tables illusion. If you were asked to select one of these tables to manoeuvre through a narrow doorway you’d probably pick the one on the left. It looks narrower, right? Well, in fact, both tables have identical dimensions. The illusion is an example of size-constancy expansion – the illusory expansion of space with apparent distance. The receding edges of the tables are seen as if stretched into depth. But, as human beings, we can’t see that – the only way we can see that the table tops are identical is to rotate the images (or get out a ruler and measure the tables). Because seeing is believing, we’re seldom inclined to test that what we perceive conforms to objective reality. Instead, we catch a glimpse and then act with certainty.

Our minds are programmed to see patterns even where there are none. We see creatures in clouds, faces in wallpaper and patterns in data that just do not exist. Have a look at Joseph Jastrow’s Duck/Rabbit illusion:

The beak of the duck becomes the ears of a rabbit. Although you can quickly and easily perceive both creatures, you have to make a choice as to which you will see at any given point. It’s often the case that when we argue about our beliefs we’re looking at the same thing but I’m arguing it’s a duck while you swear blind it’s a rabbit. It’s both and neither. It doesn’t take an enormous amount of critical thinking to accept that ducks and rabbits don’t really look like this.

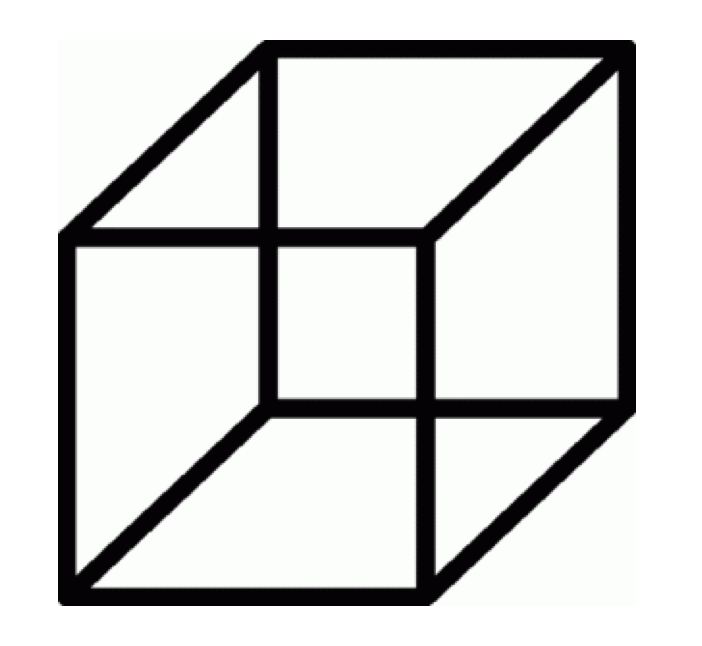

Here’s one final image to have a look at:

This is the Necker Cube. If you don’t know about it, your first thought is probably to dismiss it as uninteresting. After all, you’ve seen hundreds of 3D representations of cubes, right? But stare at it intently for about five seconds and see if you notice anything unusual happen.

The back wall suddenly pops and becomes the front! If you carry on looking it will eventually pop back. The slightly clunky analogy to make here is that if we look at anything with a sense of certainty and familiarity we’ll probably see exactly what we expect. But, if we look with fresh eyes and a sense of openness, sometimes we’ll see things we didn’t expect.

The world is far more complex than our limited perceptions of it allow us to see. We’re all regularly wrong. The preference for certainty may have served our distant ancestors well but it gets in the way of good decision making in the modern world. The better we are at admitting we’re not sure, that evidence is always contingent, that the world is greater than we can grasp, the less likely we are to get entrenched in our inevitable mistakes.
